I recently watched another Boris FX Zoom call/YouTube stream titled “Exploring New Features in Boris FX Silhouette: Live with Netflix’s Kyle Spiker [Boris FX Live #56]” https://www.youtube.com/watch?v=2zlAlYGA9js, hosted by Ben Brownlee from Boris with special guest Kyle Spiker, a VFX artist who is currently a NetFX Artist Experience Specialist at Netflix. What exactly is “NetFX”? I wondered that myself, so of course I googled it and got the answer straight from the horse’s mouth (Netflixtechblog.com):

“At Netflix, we want to entertain our global membership with series and films from around the world. In line with that, we’re excited to announce NetFX, a cloud-based platform that will make it easier for vendors, artists and creators to connect and collaborate on visual effects (VFX) for our titles.

Visual effects are in almost all of our features and series, ranging from the creation of complex creatures and environments to the removal of objects and backgrounds. NetFX is a cutting-edge platform which will provide collaborators frictionless access to infrastructure to meet Netflix’s demand for VFX services around the world as our library of original content continues to grow.”

Nice! 😀

On the Zoom call Kyle Spiker was very informative; I particularly liked it when he delved into specifics like the bird in the matte painting, bullet shot trajectories (although personally I don’t “hear” silent shots), the importance of stabilizing shots, and painting bad green screens. I also originally taught myself VFX: when Apple dropped the price of Shake from $3000 to $500, I immediately went out and bought it, then painstakingly taught myself Shake and compositing. When I applied for a compositing job on a film, I had to submit a somewhat crude demo reel composed of some of my Shake experiments/projects. Towards the end of that film project, one of the guys who had worked on it, and who had his own VFX house in CA, was thinking of opening another facility in El Paso, TX, and offered positions to people who had worked on the project. I accepted, but at the time I was working in the Northeast at a TV station and had to make the same kind of quick decision to drop everything and move to El Paso immediately. Unfortunately his proposed expansion fell through, but luckily before I had actually moved there! But Kyle is right: if a big opportunity is offered to you, take it!

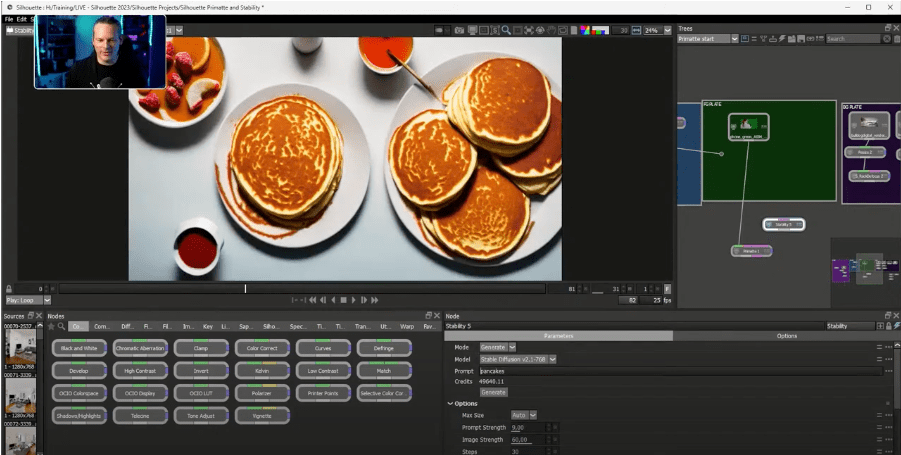

Ben then demonstrated some of the amazing new features in the latest version of Silhouette, including the addition of the Ultimatte Keyer and, even more amazingly, some new nodes such as the “Stability” node.

Initially I was confused by the Stability node, “Models”, and “Dreaming”, but then I realized that it is actually using so-called generative AI. Admittedly, Ben had mentioned machine learning in his demonstration, but it just sort of slipped by me at first. I had seen demos of OpenAI’s DALL-E on YouTube but hadn’t realized that this kind of tech had actually reached Boris FX. And I hadn’t heard of the company Stability AI (stability.ai), but I have since brought myself up to speed on it. They are an open source company that works with Amazon Web Services, which gives them access to the world’s fifth-largest supercomputer, the Ezra-1 UltraCluster. In August 2022, they released Stable Diffusion, a pioneering text-to-image model for which they provided the use of Ezra-1. Stable Diffusion 2.0 was subsequently released in November 2022.

During Ben’s demonstration, he asked for a background suggestion for a composite shot, and someone from the Boris “Mission Control” threw out “pancakes”, a silly but delicious notion! 🙂

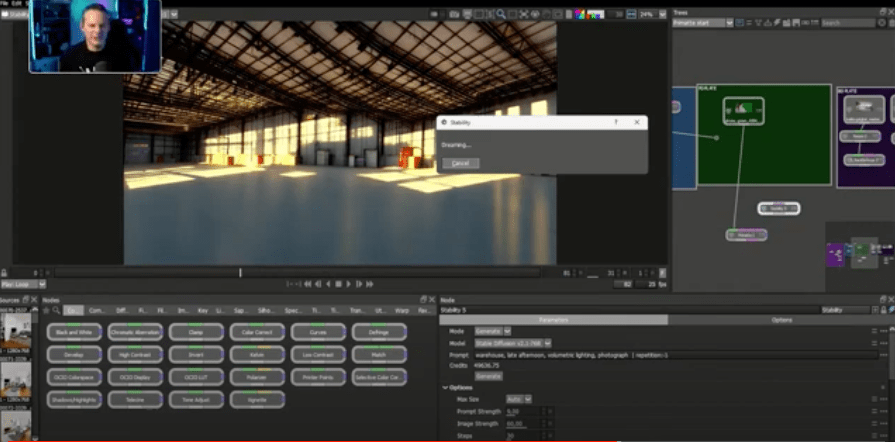

Then Ben used his own production experience to come up with a warehouse location. Amazingly, like DALL-E, the Stability node (connected to Stability AI) can not only understand English language prompts but also generate multiple iterations of images in a variety of styles, essentially on the spot.

I discovered that if you set up an account at Stability AI, they’ll give you an API Key and 25 free credits for their “DreamStudio” (the image generator). It is actually a pretty good deal, because $10 gives you 1000 credits, which generates roughly 5000 images. Not bad!
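For the curious, here is a quick back-of-the-envelope sketch of what those numbers work out to per image. It only uses the figures quoted above; the per-image rate is derived from my rough 5000-image estimate, not from an official price list, and the function and variable names are just ones I made up for illustration.

```python
# Figures quoted in this post. The 5000-image figure is a rough estimate,
# so everything derived from it is approximate too.
FREE_CREDITS = 25
CREDITS_PER_PURCHASE = 1000
PRICE_USD = 10.00
IMAGES_PER_PURCHASE = 5000


def estimate_images(credits, images_per_purchase=IMAGES_PER_PURCHASE,
                    credits_per_purchase=CREDITS_PER_PURCHASE):
    """Estimate how many images a given number of credits would generate."""
    images_per_credit = images_per_purchase / credits_per_purchase
    return credits * images_per_credit


def estimate_cost_per_image(price_usd=PRICE_USD,
                            images_per_purchase=IMAGES_PER_PURCHASE):
    """Estimate the dollar cost of a single generated image."""
    return price_usd / images_per_purchase


free_images = estimate_images(FREE_CREDITS)
cost_per_image = estimate_cost_per_image()

print(f"Free credits cover about {free_images:.0f} images")
print(f"Each image costs about ${cost_per_image:.3f}")
```

So under those assumptions, the free credits alone are good for something like 125 images, and a paid image costs a fraction of a cent.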

In my initial investigations of how the generative AI of Stable Diffusion, DALL-E, etc. actually works, I was mystified by the explanations and kept thinking that they can’t possibly work like that. They do. They are trained by taking enormous numbers of captioned images from the internet and adding more and more noise to them until they are indecipherable. The systems then learn to recreate the images in a series of denoising steps, finding statistical patterns in them and associating the text descriptions with the images along the way. (For a full description, head on over to the Big Think website: https://bigthink.com/the-future/dall-e-midjourney-stable-diffusion-models-generative-ai/)
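To convince myself of the “adding more and more noise” half of that story, here is a minimal toy sketch in plain Python. It is not how Stability AI or Boris FX implement anything; it assumes the commonly described setup where a noisy image is a weighted blend of the clean image and Gaussian noise, with a linear noise schedule whose values (`beta_start`, `beta_end`, 1000 steps) I picked as typical-looking defaults. The “image” is just five pixel values, and the learning part (a network trained to undo the noise) is left out entirely.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return, for each step, the fraction of the original signal that survives.

    Uses a linear noise schedule (an assumption for this sketch).
    """
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta  # each step keeps slightly less of the signal
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Blend clean pixel values with Gaussian noise at one noise level."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    signal_weight = math.sqrt(alpha_bar)
    noise_weight = math.sqrt(1.0 - alpha_bar)
    noisy = [signal_weight * p + noise_weight * n for p, n in zip(pixels, noise)]
    return noisy, noise


rng = random.Random(0)  # fixed seed so the run is repeatable
image = [0.0, 0.25, 0.5, 0.75, 1.0]  # a toy five-pixel "image"
alpha_bars = make_alpha_bars(1000)

for step in (0, 249, 499, 999):
    noisy, _ = add_noise(image, alpha_bars[step], rng)
    print(step, f"signal kept: {alpha_bars[step]:.4f}",
          [round(v, 2) for v in noisy])
```

At the first step the pixels are barely touched, and by the last step almost none of the original signal is left. The training trick, as I understand it, is to have the system guess the noise that was added at each level, so that it can later run the process in reverse starting from pure noise.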

Chris on the live chat asked: “Do you see any AI based solutions to speed up roto in the future?” Marco Paolini, Silhouette Product Manager, responded that the AI solutions for roto actually aren’t as good as a human roto artist yet. I find that amazing, since I would’ve thought that roto would be something that AI would be good at, and not spontaneous image generation, which is exactly the opposite of my preconceptions. Maybe everything I know is wrong. 🤨

Then Ben turned the Zoom call over to Boris Trainer Elizabeth Postol, who demonstrated the new grain management techniques in Silhouette.

But even in her demonstration the true power of the Stability node became clear when she matter-of-factly showed that she was able to create a mountain using it and composite it into the BG of a shot. Now I’m used to the fractal modeling in Bryce and Vue, but this stuff goes way beyond that in the sheer speed with which the system is able to create images.

Amazing stuff, folks.
